THE CAPABILITIES AND DOWNSIDES OF DEEP LEARNING

Why are meticulous model design, robust data collection practices, and model interpretability tools so important in deep learning?

Deep learning, a subfield of machine learning, is a powerful tool that can be applied to a wide array of tasks across many fields, including image recognition, natural language processing (NLP), autonomous vehicles, recommendation systems, and generative models.

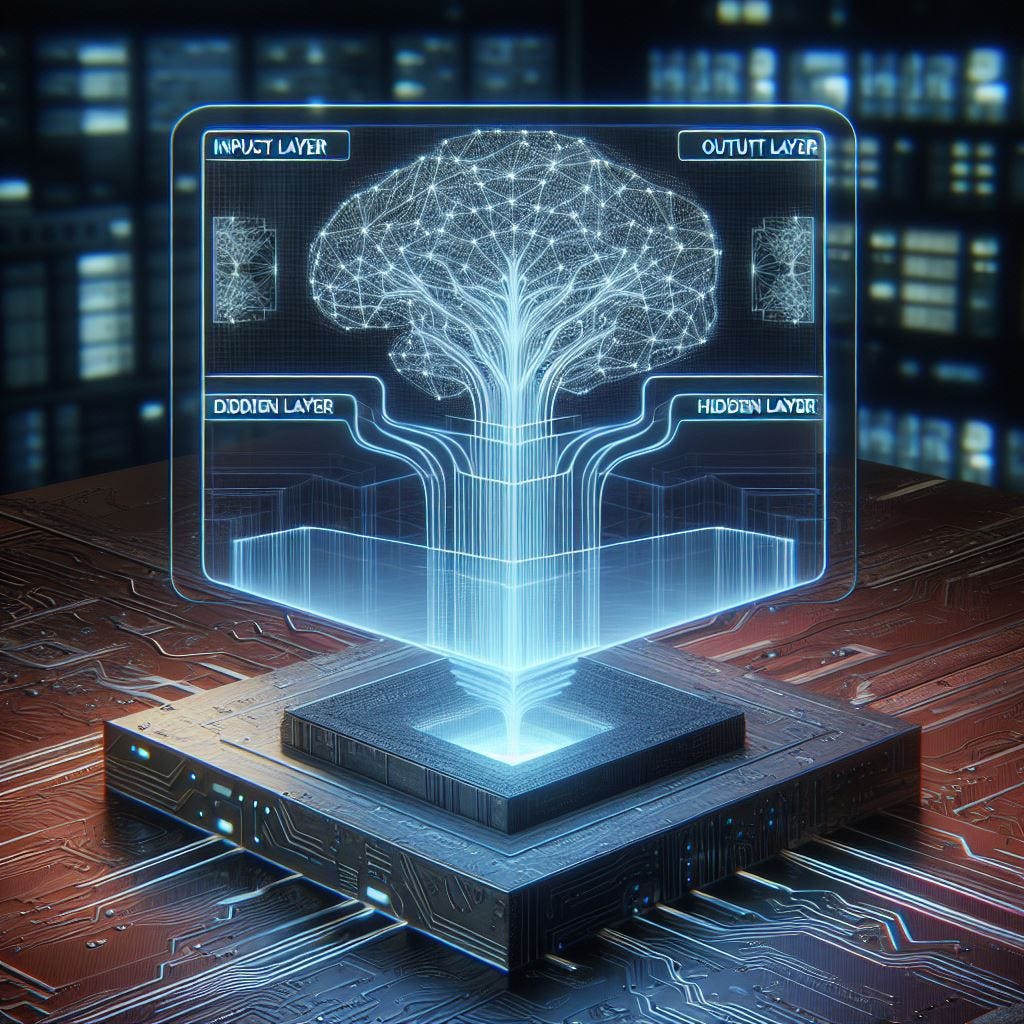

Deep learning algorithms are loosely inspired by the structure and function of the human brain's neural networks. These artificial neural networks consist of multiple layers, hence the term 'deep' in deep learning. Each layer transforms the output of the layer before it, extracting increasingly higher-level features until the final layer produces the desired output.

Artificial neural networks excel at processing unstructured data such as images, audio, and text. They have been instrumental in driving many recent advancements in AI technology.

So, how do these artificial neural networks work? A neural network is composed of layers of nodes, or 'neurons'. Each layer receives input from the previous layer, performs some computation, and passes its output to the next layer.

Here's a bit more detail:

- The 'Input Layer': This is the layer through which the neural network takes in data. Each neuron in the input layer represents a unique feature in the dataset.

- The 'Output Layer': This layer produces the network's final result for a given input, such as a class label or a predicted value.

- The 'Hidden Layers': These layers sit between the input and output layers. They perform the mathematical computations that carry information from the input nodes to the output nodes. A deep learning model has multiple hidden layers, each of which transforms the output of the previous layer to extract increasingly abstract and complex features. The 'depth' of a neural network usually refers to the number of its layers, which is dominated by the hidden layers.
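The flow of data through these three kinds of layers can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function names (`relu`, `dense`, `forward`), the ReLU activation, and the hand-picked weights are all assumptions made for the example.

```python
def relu(values):
    """Rectified linear unit: negative values become 0."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One layer: each output neuron is a weighted sum of all inputs plus a bias.

    weights[j][i] is the weight from input i to output neuron j.
    """
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(features, layers):
    """Pass features through each (weights, biases) pair in order.

    ReLU is applied after every layer except the last (the output layer).
    """
    activations = features
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

# Hypothetical parameters: 3 input features -> 2 hidden neurons -> 1 output.
layers = [
    ([[0.5, -1.0, 0.25], [1.0, 1.0, -1.0]], [0.0, 0.5]),  # hidden layer
    ([[2.0, -1.0]], [0.1]),                               # output layer
]

output = forward([2.0, 1.0, 4.0], layers)
print(output)  # a one-element list, approximately [2.1]
```

In a trained model the weights and biases would be learned from data rather than written by hand.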

In a fully connected (dense) network, each neuron in a layer is connected to every neuron in the next layer; other architectures, such as convolutional networks, use sparser connection patterns. These connections are assigned 'weights', which the model adjusts during the training process to minimize the difference between its predictions and the actual values. Adjusting these weights enables neural networks to learn complex patterns and relationships.
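To make "adjusting the weights" concrete, here is a minimal sketch that trains a single linear neuron with gradient descent. Gradient descent is one common way to adjust weights; the text above does not name a specific method, so treat that choice, the function name `train_linear_neuron`, the learning rate, and the toy data as assumptions for illustration.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit prediction = weight * x + bias by gradient descent on mean squared error."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Error of the current predictions on every training example.
        errors = [(weight * x + bias) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to weight and bias.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias

# Toy data generated from y = 2x + 1 (hypothetical, noise-free).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

weight, bias = train_linear_neuron(xs, ys)
print(round(weight, 3), round(bias, 3))  # close to 2.0 and 1.0
```

Real networks apply the same idea to many weights at once, with the gradients computed by backpropagation.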

The discrepancy between the model's predictions and the actual data is measured by an objective function, also known as a loss function or cost function. The goal of training a deep learning model is to find the model parameters that minimize the objective function.
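As an illustration, two widely used loss functions are mean squared error (typical for regression) and binary cross-entropy (typical for two-class classification). The sketch below is a plain-Python version written for this article; the function names and the clipping constant `eps` are assumptions, not part of any particular library.

```python
import math

def mean_squared_error(predictions, targets):
    """Average of squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def binary_cross_entropy(probabilities, labels, eps=1e-12):
    """Average negative log-likelihood of 0/1 labels under predicted probabilities."""
    total = 0.0
    for p, y in zip(probabilities, labels):
        # Clip to avoid log(0) when a prediction is exactly 0 or 1.
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

mse = mean_squared_error([2.5, 0.0], [3.0, -1.0])
print(mse)  # 0.625

# A confident, correct prediction is penalized less than a confident, wrong one.
good = binary_cross_entropy([0.9, 0.2], [1, 0])
bad = binary_cross_entropy([0.1, 0.8], [1, 0])
print(good < bad)  # True
```

Both functions return 0 only when the predictions match the targets exactly, and grow as predictions move away from them.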

The choice of objective function depends on the specific task and the type of data. While we generally aim to minimize the objective function, in some cases, such as when the function measures how well the model is doing (for example, a likelihood or a reward) rather than how far off it is, we aim to maximize it instead.
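The two directions are interchangeable, because maximizing a function is the same as minimizing its negative. Writing $\theta$ for the model parameters and $f$ for a quantity to be maximized (both symbols are notation introduced here for illustration):

$$
\arg\max_{\theta} f(\theta) = \arg\min_{\theta} \bigl(-f(\theta)\bigr)
$$

In practice, this means a 'maximize' objective can be handed to a minimizer simply by flipping its sign.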

Despite its power, deep learning has several downsides. First, the models require large amounts of data to train effectively, which can be a limitation where data is scarce or hard to collect. Second, training deep learning models can be computationally intensive and time-consuming, particularly for large datasets and complex models. Third, deep learning models, especially those with many layers, can be difficult to interpret. This is often referred to as the 'black box' problem.

Another significant challenge is bias: if the training data is biased, the model is likely to learn and reproduce those biases, which can lead to unfair or unethical results.

These drawbacks of deep learning underscore the importance of meticulous model design, robust data collection practices, and the use of techniques such as regularization, which helps limit overfitting, and model interpretability tools.